MEDEA

MEDEA – Model Driven Performance Prediction

The MEDEA Performance Modelling Environment is a System Execution Environment based on Model-Driven Engineering (MDE). It extends beyond the modelling and analysis of individual systems to the evaluation and performance prediction of an integrated System of Systems (SoS). A System Execution Modelling (SEM) approach involves developing executable models, so that a model of the system in question can be built and deployed onto hardware similar to (and sometimes identical to) the real platform’s infrastructure. Whilst the functionality of the system’s components may not be accurate early in the process, the deployment allows performance tests of an integrated system to be conducted very early in the design stage, highlighting problems that traditional design methodologies usually find only toward the end of a project. More information about our research in MEDEA is available here and here.

MEDEA supports modelling of representative snippets of behaviour of the constituent systems of an SoS and of their interactions. This is achieved through a family of domain-specific modelling languages (DSMLs) that model the structure and behaviour of the constituent systems, the workload that will exercise these models in different performance scenarios, and the interactions between these constituent systems. These models are then deployed on a realistic, distributed, real-time hardware testbed and executed. The results of the execution are collected and analysed through different metrics. These metrics are presented to the system expert, who decides which aspects of the SoS architecture will evolve. These changes are integrated into a new alternative SoS architecture, through the modelling DSML family, and the cycle is repeated.

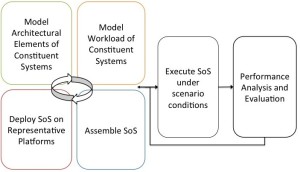

The methodology underpinning MEDEA follows a performance analysis and prediction process consisting of three phases: modelling, SEM execution, and performance analysis and evaluation, as illustrated below. The process is guided by formulating a performance question, for example, "What is the utilisation of component UAV?" A model of the constituent distributed real-time embedded (DRE) systems is defined by specifying component interfaces and workload behaviour. The deployment of the constituent systems is specified by assigning the model components to nodes in a hardware cluster. The execution of the SoS uses the defined models and deployment configurations to generate the code that is distributed to the selected hardware nodes. During execution, system information is captured and aggregated into performance metrics. The evaluation metrics are shown to the expert through context-specific visualisations that indicate whether the model fulfils the performance requirements. The expert’s decision guides the next iteration of the process, advising modifications of the model and deployment.

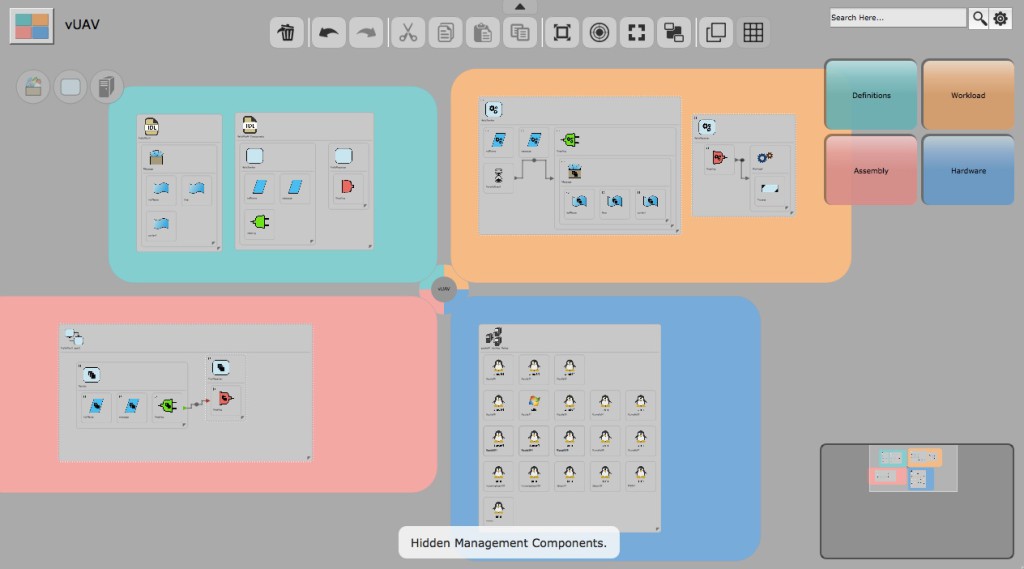

Our MEDEA performance prediction environment supports modelling in six phases, as described above, directed through our MEDEA modelling interface:

The MEDEA process as applied to an Unmanned Aerial Vehicle (UAV) can be viewed on YouTube at the following link (the video uses an earlier version of our system interface): https://www.youtube.com/watch?v=JvNwUmOyTT8

1. Modelling Architectural Alternatives of Composing Systems

The first aspect of modelling the constituent systems that form the SoS is to model the high-level architecture of each system, represented through its component interfaces and aggregated data structures.

2. Modelling Constituent System Workload

After the high-level architecture of each constituent system has been modelled, the behaviour and workload of each system need to be modelled, enabling performance models to be incorporated for each system according to its core behaviours. For each component defined within the system’s corresponding interface definition, a component implementation is required. Behaviour is initiated either by a periodic event or by the receipt of an event through a designated event port. This supports independent operation, as well as communication between system sub-components and between systems in the SoS. Within a model, branching constructs support constraint-based decision making, so that different workloads are executed according to environmental conditions. Workload models comprise CPU, memory, and I/O workloads and are defined via an algorithmic complexity statement, which may be parameterised through received data.
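To make the idea of a parameterised workload concrete, here is a minimal Python sketch. It is not MEDEA's DSML or generated code: the `Workload` record, the two complexity functions, the handler `on_event`, and the `threshold` constraint are all hypothetical names chosen for illustration. The sketch only shows how a complexity statement can turn the size of received data into CPU, memory, and I/O demands, and how a branch can select between workloads.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """Abstract resource demand of one handler invocation (illustrative units)."""
    cpu_ops: int
    memory_bytes: int
    io_bytes: int


def linear_scan(n: int) -> Workload:
    # Hypothetical O(n) complexity statement: one pass over n received items.
    return Workload(cpu_ops=n, memory_bytes=8 * n, io_bytes=0)


def pairwise_compare(n: int) -> Workload:
    # Hypothetical O(n^2) complexity statement: compare every pair of items.
    return Workload(cpu_ops=n * n, memory_bytes=8 * n, io_bytes=64 * n)


def on_event(payload_size: int, threshold: int = 100) -> Workload:
    """Behaviour triggered by an event arriving on an event port.

    The branch stands in for a constraint-based decision: the size of the
    received data selects which workload is executed.
    """
    if payload_size <= threshold:
        return linear_scan(payload_size)
    return pairwise_compare(payload_size)


small = on_event(10)    # takes the linear branch
large = on_event(200)   # takes the quadratic branch
```

In a real SEM environment the resulting demand would drive synthetic load on the testbed; here it is simply returned so the branching and parameterisation are easy to inspect.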

3. Modelling of Systems of Systems

The SoS is composed through the integration of multiple constituent systems. Within the MEDEA performance modelling environment, systems are integrated into an SoS through the instantiation of assemblies. The behavioural and workload models defined above form a generic pattern, from which multiple system instances may be created, each following the same behaviour but operating independently. The process begins with the creation of individual system assemblies, in which component instances based on the behaviour and workload models from the previous modelling stage are instantiated within a defined assembly. Connections are then made between the incoming and outgoing event ports of each component, validated against the event types available in the interface definitions. Where external input is sought for a system, or where external output is expected, an assembly may be annotated with incoming and outgoing event ports of the corresponding event types.
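The port-validation step can be sketched as follows. This is an illustrative Python model under stated assumptions, not MEDEA's implementation: `ComponentInstance`, `Assembly`, `connect`, and the event type names are hypothetical, and event types are compared as plain strings.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class ComponentInstance:
    """A component instance with typed event ports (port name -> event type)."""
    name: str
    out_ports: Dict[str, str] = field(default_factory=dict)
    in_ports: Dict[str, str] = field(default_factory=dict)


@dataclass
class Assembly:
    """A named group of component instances and the connections between them."""
    name: str
    components: Dict[str, ComponentInstance] = field(default_factory=dict)
    connections: List[Tuple[str, str, str, str]] = field(default_factory=list)

    def add(self, component: ComponentInstance) -> None:
        self.components[component.name] = component

    def connect(self, src: str, out_port: str, dst: str, in_port: str) -> None:
        # Look up the event type declared on each end of the connection.
        out_type = self.components[src].out_ports[out_port]
        in_type = self.components[dst].in_ports[in_port]
        # Reject the connection if the event types do not match.
        if out_type != in_type:
            raise TypeError(
                f"{src}.{out_port} emits {out_type}, "
                f"but {dst}.{in_port} expects {in_type}"
            )
        self.connections.append((src, out_port, dst, in_port))


uav = Assembly("uav")
uav.add(ComponentInstance("sensor", out_ports={"reading_out": "SensorReading"}))
uav.add(ComponentInstance(
    "planner",
    in_ports={"reading_in": "SensorReading", "command_in": "Command"},
))
uav.connect("sensor", "reading_out", "planner", "reading_in")  # types match
```

Connecting `sensor.reading_out` to `planner.command_in` would raise a `TypeError` in this sketch, mirroring the idea that only ports of the same event type may be wired together.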

An SoS may consist of a variety of systems from independent sources, including custom-developed, COTS and legacy systems. To support the integration of such systems, for which models may not easily be constructed or may not exist, a ‘black-box’ system may be modelled through its architectural interface and then integrated as an external system within the SoS assembly. At the end of this stage in our modelling methodology, the SoS, combining all modelled and black-box constituent systems, is ready to be executed on a representative deployment platform.

4. Deployment

In a SEM-based modelling environment, executable models of workload and behaviour representing the SoS are executed on a representative deployment platform in order to obtain performance data for that specific configuration and scenario. The final modelling stage in our methodology is to model how these systems will be deployed on the available hardware testbed.
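At its simplest, a deployment model is a mapping from component instances to hardware nodes. The following Python sketch, which assumes hypothetical instance and node names and a hypothetical helper `validate_deployment`, checks that every instance is assigned to a known node and groups the instances per node. MEDEA's actual deployment model may carry more information than this.

```python
from typing import Dict, List


def validate_deployment(
    instances: List[str],
    nodes: List[str],
    deployment: Dict[str, str],
) -> Dict[str, List[str]]:
    """Check a deployment mapping and return node -> list of hosted instances.

    Raises ValueError if an instance is unassigned or assigned to an
    unknown node.
    """
    per_node: Dict[str, List[str]] = {node: [] for node in nodes}
    for instance in instances:
        if instance not in deployment:
            raise ValueError(f"instance {instance!r} has no node assigned")
        node = deployment[instance]
        if node not in per_node:
            raise ValueError(f"instance {instance!r} mapped to unknown node {node!r}")
        per_node[node].append(instance)
    return per_node


# Hypothetical scenario: two UAV components and a ground station on two nodes.
placement = validate_deployment(
    instances=["uav.sensor", "uav.planner", "ground.station"],
    nodes=["node-a", "node-b"],
    deployment={
        "uav.sensor": "node-a",
        "uav.planner": "node-a",
        "ground.station": "node-b",
    },
)
```

Grouping by node makes it easy to compare alternative deployments of the same SoS model, for example by moving `uav.planner` to `node-b` and re-running the scenario.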

5. MEDEA SEM-based Execution

In the observation stage, MEDEA executes the generated code within its indicated deployment to produce execution traces and basic metrics about system performance. Both hardware and software metrics are captured. Hardware metrics include CPU and memory utilisation, while the application-defined software metrics are associated with workload and network latencies. The execution traces produce a large amount of raw information, which is processed by an evaluation engine that aggregates the data as specified. Aggregated performance information is passed to the MEDEA visualisation component to give the user an overview of the causes of particular performance values.
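As a rough illustration of the aggregation step, the sketch below reduces raw CPU samples to a mean utilisation per hardware node. The `Sample` record, its fields, and `mean_cpu_utilisation` are assumptions made for this example; the trace format and metrics produced by MEDEA's evaluation engine are not specified here.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, NamedTuple


class Sample(NamedTuple):
    """One raw hardware observation from an execution trace (hypothetical format)."""
    node: str
    timestamp: float        # seconds since the start of the run
    cpu_utilisation: float  # fraction in the range 0.0 to 1.0


def mean_cpu_utilisation(samples: Iterable[Sample]) -> Dict[str, float]:
    """Aggregate raw samples into the mean CPU utilisation of each node."""
    by_node: Dict[str, List[float]] = defaultdict(list)
    for sample in samples:
        by_node[sample.node].append(sample.cpu_utilisation)
    return {node: mean(values) for node, values in by_node.items()}


trace = [
    Sample("node-a", 0.0, 0.25),
    Sample("node-a", 1.0, 0.75),
    Sample("node-b", 0.0, 0.5),
]
summary = mean_cpu_utilisation(trace)
```

A per-node mean like this is the kind of figure that could answer a performance question such as the utilisation of a particular component's host, though a real analysis would likely also look at peaks and latencies.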

The MEDEA evaluation phase uses an interactive visualisation tool. This tool is designed to show relationships, activities and alert or error conditions to indicate whether the model is working correctly and where constraints are not being met. It is possible to show physical relationships between components and hardware nodes as well as the connections between them. The visualisation tool includes a notification system that provides a focal point to the user when specific events, constraint violations or items of interest occur within the model. Combined with the ability to scale up and down so as to see all of a large model from a high level, while still being able to see all details for an individual component, this approach makes the visualisation system a powerful tool in understanding if and how the system is meeting the performance requirements.

The MEDEA tools used for Observation, Aggregation and Visualisation continue to be improved and developed, and we are interested in collaborators to help us improve and explore our performance prediction methodology. The main contact for this research is A/Prof Katrina Falkner.